服务器基本信息

| Hostname | IP | CPU | 内存 | 硬盘 |

|---|---|---|---|---|

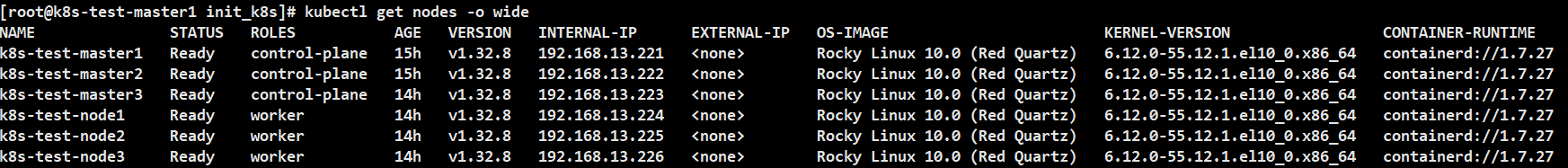

| k8s-test-master1 | 192.168.13.221 | 2核 | 4G | 100G |

| k8s-test-master2 | 192.168.13.222 | 2核 | 4G | 100G |

| k8s-test-master3 | 192.168.13.223 | 2核 | 4G | 100G |

| k8s-test-node1 | 192.168.13.224 | 2核 | 4G | 100G |

| k8s-test-node2 | 192.168.13.225 | 2核 | 4G | 100G |

| k8s-test-node3 | 192.168.13.226 | 2核 | 4G | 100G |

Basic configuration

Run on every host:

```bash
# Set the hostname (use each host's own name from the table); `bash` opens a new shell so the prompt shows it
hostnamectl set-hostname k8s-test-master1 && bash

# Open the firewall (all ports) to the cluster nodes, the pod network and the service network.
# Position 5 assumes the default iptables-services ruleset, in which rule 5 is the final REJECT;
# check your INPUT chain with `iptables -L INPUT --line-numbers` and adjust if needed.
iptables -I INPUT 5 -s 192.168.13.221/32 -m comment --comment "k8s-test-cluster" -j ACCEPT
iptables -I INPUT 5 -s 192.168.13.222/32 -m comment --comment "k8s-test-cluster" -j ACCEPT
iptables -I INPUT 5 -s 192.168.13.223/32 -m comment --comment "k8s-test-cluster" -j ACCEPT
iptables -I INPUT 5 -s 192.168.13.224/32 -m comment --comment "k8s-test-cluster" -j ACCEPT
iptables -I INPUT 5 -s 192.168.13.225/32 -m comment --comment "k8s-test-cluster" -j ACCEPT
iptables -I INPUT 5 -s 192.168.13.226/32 -m comment --comment "k8s-test-cluster" -j ACCEPT
iptables -I INPUT 5 -s 10.200.0.0/16 -m comment --comment "k8s-test-cluster-pod" -j ACCEPT
iptables -I INPUT 5 -s 10.201.0.0/16 -m comment --comment "k8s-test-cluster-service" -j ACCEPT
```
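The eight ACCEPT rules above differ only in their source address, so a loop can produce them. A minimal sketch, assuming the node IPs, pod CIDR, service CIDR and insert position 5 used in this article; `gen_cluster_rules` is a hypothetical name, and it only prints the commands so they can be reviewed before anything is applied:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the iptables ACCEPT rules for the cluster.
# Assumptions from this article: nodes 192.168.13.221-226,
# pod CIDR 10.200.0.0/16, service CIDR 10.201.0.0/16, insert position 5.
gen_cluster_rules() {
  local pos="${1:-5}" ip
  # One /32 rule per node, tagged with the same comment as above
  for ip in 192.168.13.{221..226}; do
    printf 'iptables -I INPUT %s -s %s/32 -m comment --comment "k8s-test-cluster" -j ACCEPT\n' "$pos" "$ip"
  done
  # Pod and service networks
  printf 'iptables -I INPUT %s -s 10.200.0.0/16 -m comment --comment "k8s-test-cluster-pod" -j ACCEPT\n' "$pos"
  printf 'iptables -I INPUT %s -s 10.201.0.0/16 -m comment --comment "k8s-test-cluster-service" -j ACCEPT\n' "$pos"
}
```

For example, `gen_cluster_rules > /tmp/k8s-rules.sh`, review the file, then `bash /tmp/k8s-rules.sh`. Printing instead of executing keeps the helper safe to try on a host whose INPUT chain differs from the assumed layout.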

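Save the rules so they survive a reboot (this relies on the iptables-services package):

```bash
service iptables save
```

Passwordless SSH

Run on master1 only: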

```bash
# Add the cluster nodes to the local hosts file
cat >> /etc/hosts << EOF
# K8s cluster nodes
192.168.13.221 k8s-test-master1
192.168.13.222 k8s-test-master2
192.168.13.223 k8s-test-master3
192.168.13.224 k8s-test-node1
192.168.13.225 k8s-test-node2
192.168.13.226 k8s-test-node3
EOF
```
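Since the block above appends with `cat >>`, running it twice duplicates every entry. A sketch of an idempotent variant that appends only the missing lines; `add_cluster_hosts` is a hypothetical name and the entries are copied from the server table:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the cluster entries to a hosts file only if they
# are not already present, so the step can be re-run safely.
# The entries mirror the server table in this article.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}" entry
  while IFS= read -r entry; do
    # -x: whole-line match, -F: dots are literal, not regex wildcards
    if ! grep -qxF -- "$entry" "$hosts_file" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$hosts_file"
    fi
  done <<'EOF'
192.168.13.221 k8s-test-master1
192.168.13.222 k8s-test-master2
192.168.13.223 k8s-test-master3
192.168.13.224 k8s-test-node1
192.168.13.225 k8s-test-node2
192.168.13.226 k8s-test-node3
EOF
}
```

Run `add_cluster_hosts` as root to update /etc/hosts, or pass another file path to try it on a copy first.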

```bash
# Generate an RSA 4096 key (RSA for compatibility with older systems)
ssh-keygen -t rsa -b 4096 -o -a 100 -C "k8s-test-cluster" -f ~/.ssh/id_rsa

# Copy the public key to every node, master1 included
ssh-copy-id k8s-test-master1
ssh-copy-id k8s-test-master2
ssh-copy-id k8s-test-master3
ssh-copy-id k8s-test-node1
ssh-copy-id k8s-test-node2
ssh-copy-id k8s-test-node3
```

Install and configure Ansible

Run on master1 only:

```bash
yum install -y python3-devel
pip3 install ansible
mkdir -p /etc/ansible

# Make Ansible use python3 on the managed hosts and skip the host-key prompt
cat >> /etc/profile << EOF
export ANSIBLE_PYTHON_INTERPRETER=/usr/bin/python3
export ANSIBLE_HOST_KEY_CHECKING=False
EOF
source /etc/profile

# Inventory with a master group and a worker group
cat >> /etc/ansible/hosts << EOF
[k8s_test_master]
192.168.13.221
192.168.13.222
192.168.13.223

[k8s_test_node]
192.168.13.224
192.168.13.225
192.168.13.226
EOF
```

Batch operations with Ansible

All Ansible commands are run on master1.

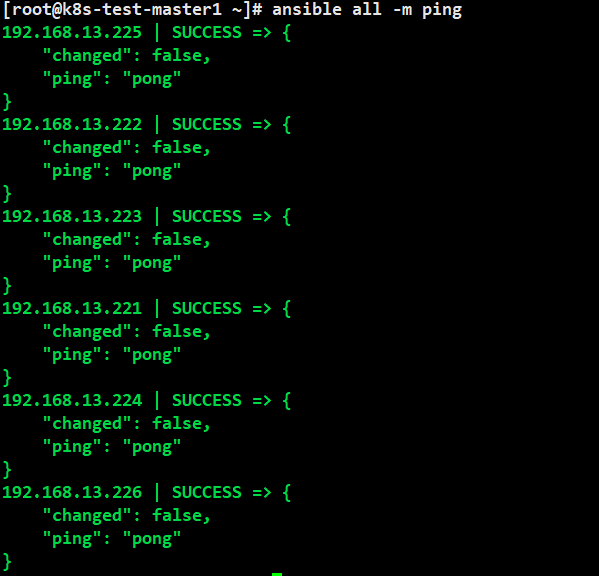

Test connectivity

Check that every host in the cluster is reachable: `ansible all -m ping`

Initialize the cluster nodes

```yaml
---
- name: Initialize cluster nodes
  hosts: all
  become: yes
  tasks:
    - name: Disable SELinux in the config file
      lineinfile:
        path: /etc/selinux/config
        regexp: '^SELINUX='
        line: 'SELINUX=disabled'
        state: present

    - name: Check the current SELinux status
      command: getenforce
      register: selinux_status
      changed_when: false
      ignore_errors: yes

    - name: Switch to permissive mode for now (if currently enforcing)
      command: setenforce 0
      when: "'Enforcing' in selinux_status.stdout"

    - name: Disable swap
      command: swapoff -a
      ignore_errors: yes

    - name: Permanently comment out the swap lines in /etc/fstab
      ansible.builtin.replace:
        path: /etc/fstab
        # [^#\n]: a match must not start on an empty line and run on into the next one
        regexp: '^([^#\n].*swap.*)'
        replace: '#\1'

- name: Configure YUM repositories and install base packages
  hosts: all
  become: yes
  tasks:
    - name: Install yum-utils
      yum:
        name: yum-utils
        state: present

    - name: Add the Docker CE repository
      shell: yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

    # This file is overwritten by the v1.32 repository in 3_install_kube_tools.yaml
    - name: Configure the Kubernetes repository
      copy:
        dest: /etc/yum.repos.d/kubernetes.repo
        content: |
          [kubernetes]
          name=Kubernetes
          baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
          enabled=1
          gpgcheck=0

    - name: Refresh the cache and install packages
      yum:
        name:
          - bash-completion
          - device-mapper-persistent-data
          - lvm2
          - wget
          - net-tools
          - nfs-utils
          - lrzsz
          - gcc
          - gcc-c++
          - make
          - cmake
          - libxml2-devel
          - openssl-devel
          - curl
          - curl-devel
          - unzip
          - sudo
          - libaio-devel
          - vim
          - ncurses-devel
          - autoconf
          - automake
          - zlib-devel
          - python-devel
          - epel-release
          - openssh-server
          - socat
          - ipvsadm
          - conntrack
          - telnet
        state: present
        update_cache: yes

- name: Configure kernel parameters for k8s
  hosts: all
  become: yes
  tasks:
    - name: Load the br_netfilter module
      command: modprobe br_netfilter

    # systemd-modules-load reads /etc/modules-load.d/*.conf at boot
    # (/etc/profile only runs when a login shell starts, not at boot)
    - name: Load br_netfilter automatically at boot
      copy:
        dest: /etc/modules-load.d/k8s.conf
        content: |
          br_netfilter

    - name: Write the k8s kernel parameters
      copy:
        dest: /etc/sysctl.d/k8s.conf
        content: |
          net.bridge.bridge-nf-call-ip6tables = 1
          net.bridge.bridge-nf-call-iptables = 1
          net.ipv4.ip_forward = 1

    - name: Apply the kernel parameters
      command: sysctl -p /etc/sysctl.d/k8s.conf
```

Copy the YAML above into 1_init.yaml and run the playbook: `ansible-playbook 1_init.yaml`
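The swap task comments out every /etc/fstab line that contains "swap" and does not already start with `#`, which can also catch a non-swap mount whose path happens to include that word. A line-by-line equivalent with `sed -E` lets you preview the result on a host (without `-i`, sed only prints), and a grep lists swap entries that are still active. Both function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helpers around the "comment out swap" step of 1_init.yaml.

# Print fstab with the substitution applied (dry run: no -i, the file is unchanged)
preview_fstab_swap() {
  sed -E 's/^([^#].*swap.*)/#\1/' "${1:-/etc/fstab}"
}

# Print swap lines that are still active; exits non-zero when there are none
active_swap_lines() {
  grep -E '^[^#].*swap' "${1:-/etc/fstab}"
}
```

After the playbook has run, `active_swap_lines` should print nothing on every host, and `free -m` should report 0 swap.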

Install containerd

```yaml
---
- name: Install containerd
  hosts: all
  become: yes
  tasks:
    # Install containerd.io from the Docker CE repository (no version is pinned here)
    - name: Install containerd.io
      yum:
        name: containerd.io
        state: present

    # Create the containerd config directory
    - name: Create /etc/containerd directory
      file:
        path: /etc/containerd
        state: directory
        mode: '0755'

    # Back up the existing config, if there is one
    - name: Backup existing config if exists
      copy:
        src: /etc/containerd/config.toml
        dest: /etc/containerd/config.toml.bak
        remote_src: yes
      ignore_errors: yes

    # Generate the default config
    - name: Generate default containerd config
      shell: containerd config default > /etc/containerd/config.toml

    # Use the systemd cgroup driver, matching the kubelet
    - name: Enable SystemdCgroup
      replace:
        path: /etc/containerd/config.toml
        regexp: 'SystemdCgroup = false'
        replace: 'SystemdCgroup = true'

    # Replace the whole sandbox (pause) image reference with the Aliyun copy.
    # pause:3.10 is assumed to match Kubernetes 1.32; confirm with
    # `kubeadm config images list --config kubeadm.yaml`.
    - name: Update sandbox image registry
      replace:
        path: /etc/containerd/config.toml
        regexp: 'sandbox_image = ".*"'
        replace: 'sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.10"'

    # Move containerd's data directory to /data/containerd
    - name: Change containerd root directory
      replace:
        path: /etc/containerd/config.toml
        regexp: 'root = "/var/lib/containerd"'
        replace: 'root = "/data/containerd"'

    # Read per-registry mirror settings from /etc/containerd/certs.d
    - name: Configure mirror registry
      replace:
        path: /etc/containerd/config.toml
        regexp: 'config_path = ""'
        replace: 'config_path = "/etc/containerd/certs.d"'

    # Create the mirror config directory for docker.io
    - name: Create certs.d directory
      file:
        path: /etc/containerd/certs.d/docker.io
        state: directory
        mode: '0755'

    # Mirror config: one [host."..."] table per mirror, tried in the order listed
    - name: Configure mirror registry hosts.toml
      copy:
        dest: /etc/containerd/certs.d/docker.io/hosts.toml
        content: |
          server = "https://registry-1.docker.io"

          # Add "push" to capabilities only if you also push through a mirror
          [host."https://vh3bm52y.mirror.aliyuncs.com"]
            capabilities = ["pull", "resolve"]

          [host."https://registry.docker-cn.com"]
            capabilities = ["pull", "resolve"]

          [host."https://hub.bktencent.com"]
            capabilities = ["pull", "resolve"]
        mode: '0644'

    # Create the crictl config file
    - name: Configure crictl.yaml
      copy:
        dest: /etc/crictl.yaml
        content: |
          runtime-endpoint: unix:///run/containerd/containerd.sock
          image-endpoint: unix:///run/containerd/containerd.sock
          timeout: 10
          debug: false
        mode: '0644'

    # Enable containerd and restart it so the edited config is loaded
    - name: Enable and restart containerd service
      systemd:
        name: containerd
        enabled: yes
        state: restarted

    # Check the containerd service status
    - name: Check containerd service status
      command: systemctl status containerd
      register: containerd_status
      changed_when: false   # this task does not change the system

    - name: Display containerd service status
      debug:
        var: containerd_status.stdout_lines
```

Copy the YAML above into 2_install_containerd.yaml and run the playbook: `ansible-playbook 2_install_containerd.yaml`
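An Ansible `replace` task whose regular expression matches nothing reports `ok` instead of failing, so it is worth confirming that the edits actually landed in config.toml. A minimal sketch, assuming the containerd 1.x config format with double-quoted values (containerd 2.x uses a different layout, config version 3, where these patterns do not apply); `check_containerd_config` is a hypothetical name and the expected strings are the values used in this article:

```bash
#!/usr/bin/env bash
# Hypothetical helper: report whether the edits from 2_install_containerd.yaml
# are present in containerd's config. Assumes the containerd 1.x format;
# the expected strings are the values used in this article.
check_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}" rc=0 pattern
  for pattern in \
    'SystemdCgroup = true' \
    'sandbox_image = "registry.aliyuncs.com/google_containers/' \
    'root = "/data/containerd"' \
    'config_path = "/etc/containerd/certs.d"'; do
    # -F: fixed-string match, so dots and slashes are literal
    if grep -qF -- "$pattern" "$cfg"; then
      echo "OK      $pattern"
    else
      echo "MISSING $pattern"
      rc=1
    fi
  done
  return "$rc"
}
```

Run it on any node after the playbook; a `MISSING` line points at the task whose pattern did not match the generated config.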

Install kubelet and kubeadm

```yaml
---
- name: Install kubelet and kubeadm on all nodes
  hosts: all    # all nodes
  become: yes   # run as root
  tasks:
    - name: Create kubernetes.repo
      ansible.builtin.copy:
        dest: /etc/yum.repos.d/kubernetes.repo
        content: |
          [kubernetes]
          name=Kubernetes
          baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.32/rpm/
          enabled=1
          gpgcheck=1
          gpgkey=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.32/rpm/repodata/repomd.xml.key
          exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

    - name: Install kubelet/kubeadm (bypassing the repo's exclude list)
      ansible.builtin.yum:
        name:
          - kubelet
          - kubeadm
        state: present
        disable_excludes: kubernetes   # key parameter, same as --disableexcludes=kubernetes
        disable_gpg_check: yes         # skips GPG verification; drop this line to check against gpgkey

    - name: Enable the kubelet service
      systemd:
        name: kubelet
        enabled: yes

- name: Install kubectl and bash completion on master nodes only
  hosts: k8s_test_master   # master nodes only
  become: yes
  tasks:
    - name: Install kubectl and bash-completion
      yum:
        name:
          - kubectl
          - bash-completion
        state: present
        disable_excludes: kubernetes   # same as --disableexcludes=kubernetes
        disable_gpg_check: yes         # skips GPG verification; drop this line to check against gpgkey

    - name: Create the bash completion directory
      file:
        path: /etc/bash_completion.d/
        state: directory
        mode: '0755'

    - name: Generate the kubectl bash completion script
      shell: kubectl completion bash > /etc/bash_completion.d/kubectl
      args:
        creates: /etc/bash_completion.d/kubectl   # idempotency: skip if the file already exists

    # Sourcing the script from Ansible would only affect Ansible's own short-lived shell,
    # so completion shows up in new login shells (or after running
    # `source /etc/bash_completion.d/kubectl` in your current shell).
```

Copy the YAML above into 3_install_kube_tools.yaml and run the playbook: `ansible-playbook 3_install_kube_tools.yaml`
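Keeping kubelet, kubeadm and the kubernetesVersion set in kubeadm.yaml on the same minor version avoids version-skew surprises, for example after the repository URL is bumped to a newer minor release. A sketch with hypothetical helper names that compares MAJOR.MINOR only:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for comparing Kubernetes versions by MAJOR.MINOR only.

# Print the first "MAJOR.MINOR" found in a version string, e.g.
# "v1.32.3", "Kubernetes v1.32.3" (kubelet --version) or "1.32.0" (kubeadm.yaml).
k8s_minor() {
  printf '%s\n' "$1" | grep -oE '[0-9]+\.[0-9]+' | head -n 1
}

# Succeed when both strings contain a version and their MAJOR.MINOR parts match.
same_minor() {
  local a b
  a="$(k8s_minor "$1")"
  b="$(k8s_minor "$2")"
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

On a node: `same_minor "$(kubelet --version)" "$(kubeadm version -o short)" && echo match`. On master1, once kubeadm.yaml exists: `same_minor "$(kubeadm version -o short)" "$(awk '/^kubernetesVersion:/ {print $2}' kubeadm.yaml)"`.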

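Initialize the cluster

On the master node k8s-test-master1, generate kubeadm's default init configuration: `kubeadm config print init-defaults > kubeadm.yaml`

Edit the file to match your environment. controlPlaneEndpoint points at the API server load balancer (k8s-test-lb.example.net:8443 here), which must already be running and resolvable from every node before kubeadm init: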

apiVersion: kubeadm.k8s.io/v1beta4

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

# 当前节点IP

advertiseAddress: 192.168.13.221

bindPort: 6443

nodeRegistration:

# 指定容器运行的socket文件

criSocket: unix:///run/containerd/containerd.sock

imagePullPolicy: IfNotPresent

imagePullSerial: true

# 当前节点主机名

name: k8s-test-master1

taints: null

timeouts:

controlPlaneComponentHealthCheck: 4m0s

discovery: 5m0s

etcdAPICall: 2m0s

kubeletHealthCheck: 4m0s

kubernetesAPICall: 1m0s

tlsBootstrap: 5m0s

upgradeManifests: 5m0s

---

apiServer:

certSANs:

- "k8s-test-lb.example.net" # API Server 域名

- "192.168.13.221"

- "192.168.13.222"

- "192.168.13.223"

apiVersion: kubeadm.k8s.io/v1beta4

caCertificateValidityPeriod: 87600h0m0s

certificateValidityPeriod: 8760h0m0s

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

# 设置负载地址

controlPlaneEndpoint: "k8s-test-lb.example.net:8443"

controllerManager: {}

dns: {}

encryptionAlgorithm: RSA-2048

etcd:

local:

dataDir: /var/lib/etcd

# 镜像地址使用阿里云加速

imageRepository: registry.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: 1.32.0

networking:

dnsDomain: cluster.local

# Service 网段(注意不要与 Pod 网段冲突)

serviceSubnet: 10.201.0.0/16

# Pod 网段(与 CNI 插件配置一致,比如 Calico/Flannel)

podSubnet: 10.200.0.0/16

proxy: {}

scheduler: {}

# 追加以下内容

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

# 使用ipvs替代默认的iptables

mode: ipvs

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

# 与containerd一致使用systemd作为Cgroup驱动

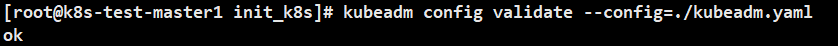

cgroupDriver: systemd验证配置文件是否正确:kubeadm config --config=./kubeadm.yaml validate
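Separately from kubeadm's own validation, it is easy to confirm that podSubnet (10.200.0.0/16), serviceSubnet (10.201.0.0/16) and the node network do not overlap. A pure-bash sketch with hypothetical function names; the node network is assumed to be 192.168.13.0/24 because the article only lists host addresses:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: IPv4 CIDR overlap check using only bash arithmetic.

# Convert a dotted IPv4 address to a 32-bit integer
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# Succeed (exit 0) if the two CIDR blocks share at least one address.
# Two blocks overlap exactly when they agree on the bits of the shorter prefix.
cidrs_overlap() {
  local a_ip="${1%/*}" a_len="${1#*/}" b_ip="${2%/*}" b_len="${2#*/}"
  local len=$(( a_len < b_len ? a_len : b_len ))
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local a b
  a="$(ip_to_int "$a_ip")"
  b="$(ip_to_int "$b_ip")"
  (( (a & mask) == (b & mask) ))
}
```

For example, `cidrs_overlap 10.200.0.0/16 192.168.13.0/24 && echo conflict` prints nothing for this article's values, while `cidrs_overlap 192.168.0.0/16 192.168.13.0/24` succeeds, which is why Calico's default pool is changed later.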

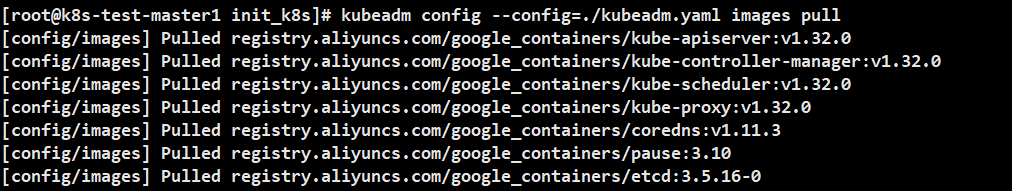

Pull the images required by the configuration: `kubeadm config images pull --config ./kubeadm.yaml`

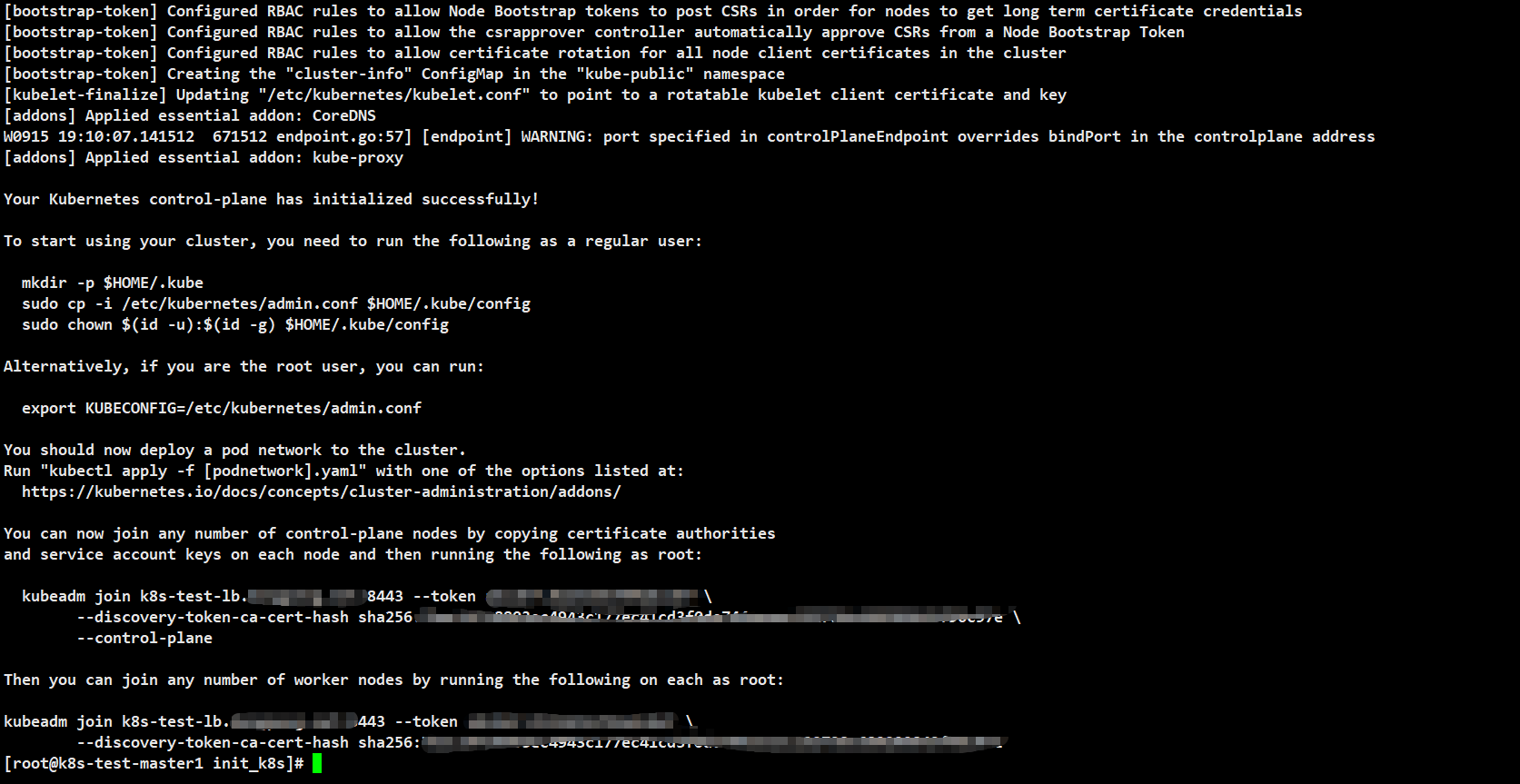

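Initialize the cluster with the configuration: `kubeadm init --config ./kubeadm.yaml`

If something goes wrong during installation and you need to start over, reset the node with `kubeadm reset`.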

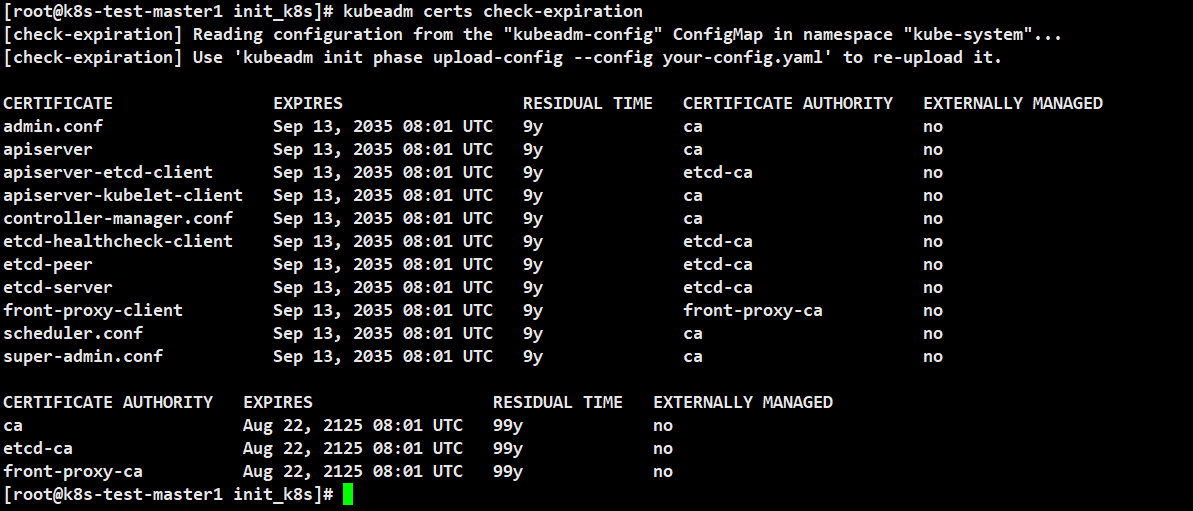

Check certificate expiry: `kubeadm certs check-expiration`

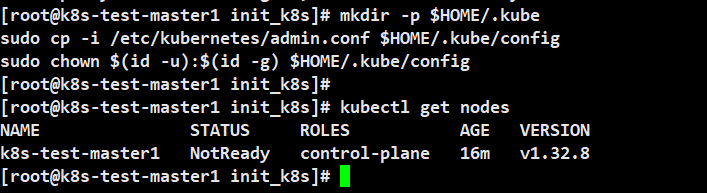

Configure kubectl

```bash
# Copy the admin kubeconfig so kubectl can manage the cluster with its credentials
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Check the node status
kubectl get nodes
```

Adding nodes

On master1, create a fresh token and print the complete join command: `kubeadm token create --print-join-command`

Adding master nodes

On each new master node, copy the shared certificates from master1:

```bash
mkdir -p /etc/kubernetes/pki/etcd
scp k8s-test-master1:/etc/kubernetes/pki/ca.* /etc/kubernetes/pki/
scp k8s-test-master1:/etc/kubernetes/pki/sa.* /etc/kubernetes/pki/
scp k8s-test-master1:/etc/kubernetes/pki/front-proxy-ca.* /etc/kubernetes/pki/
scp k8s-test-master1:/etc/kubernetes/pki/etcd/ca.* /etc/kubernetes/pki/etcd/
```

Then join with the extra --control-plane flag (substitute the token and hash printed by the command above):

```bash
kubeadm join k8s-test-lb.example.net:8443 --token ckbihv.ldca9ld502p5h835 --discovery-token-ca-cert-hash sha256:7bac19718803ec4943c177ec41cd3f0de744aeaac132738c689299843f96c97e --control-plane
```
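The scp commands above run on the new master and pull from master1, but passwordless SSH was only configured from master1 to the other hosts, so they will prompt for a password. A sketch of the opposite direction, run on master1 before the join. It only prints commands, assumes the `ca.*`, `sa.*`, `front-proxy-ca.*` and `etcd/ca.*` globs expand to the standard kubeadm file names listed in the code, and `pki_push_cmds` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the commands that push the shared CA and
# service-account keys from master1 to a new control-plane node.
# Run on master1 (where passwordless SSH was configured). Only prints commands.
# Assumed file names: the standard kubeadm ca.*, sa.*, front-proxy-ca.* and etcd/ca.* files.
pki_push_cmds() {
  local host="$1" pki=/etc/kubernetes/pki f
  echo "ssh $host mkdir -p $pki/etcd"
  for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key; do
    echo "scp $pki/$f $host:$pki/"
  done
  for f in ca.crt ca.key; do
    echo "scp $pki/etcd/$f $host:$pki/etcd/"
  done
}
```

For example, `pki_push_cmds k8s-test-master2`, check the output, then pipe it to `bash` and run the join command on k8s-test-master2.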

添加worker节点

通过master1的ansible批量执行

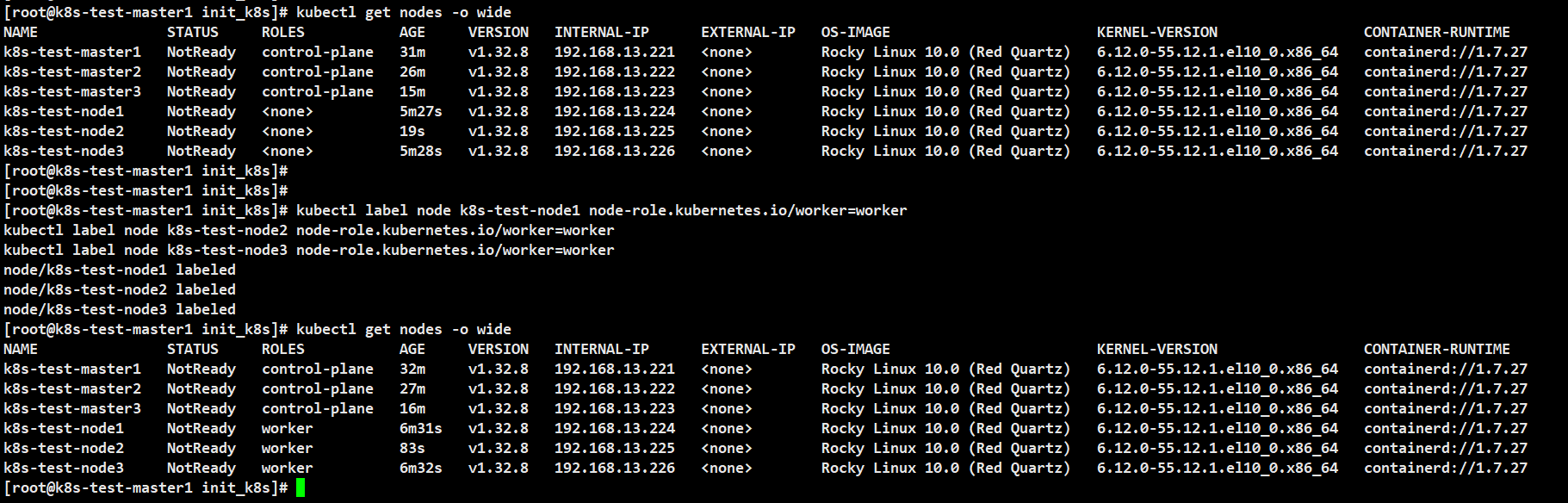

ansible k8s_test_node -m shell -a "kubeadm join k8s-test-lb.example.net:8443 --token ckbihv.ldca9ld502p5h835 --discovery-token-ca-cert-hash sha256:7bac19718803ec4943c177ec41cd3f0de744aeaac132738c689299843f96c97e"查看状态

标记节点:

kubectl label node k8s-test-node1 node-role.kubernetes.io/worker=worker

kubectl label node k8s-test-node2 node-role.kubernetes.io/worker=worker

kubectl label node k8s-test-node3 node-role.kubernetes.io/worker=worker

kubectl get nodes -o wide

可以看到各个节点的状态还是处于NotReady,需要安装网络插件打通网络

安装网络组件

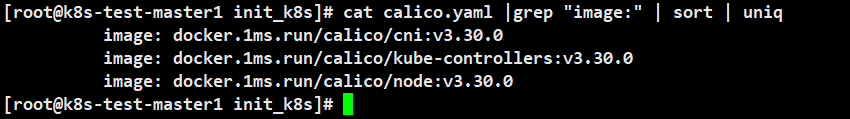

下载并修改cailco.yml内容:

wget https://raw.githubusercontent.com/projectcalico/calico/v3.30.0/manifests/calico.yaml

# 修改镜像地址

sed -i 's@image: docker.io/@image: docker.1ms.run/@g' calico.yaml

cat calico.yaml |grep "image:" | sort | uniq
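The CALICO_IPV4POOL_CIDR change described next can also be made with sed instead of a text editor. The sketch assumes the v3.30.0 manifest ships those two lines commented out as `# - name: CALICO_IPV4POOL_CIDR` and `#   value: "192.168.0.0/16"` (confirm with `grep -n -A1 CALICO_IPV4POOL_CIDR calico.yaml` first) and requires GNU sed for `-i`; both function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the CALICO_IPV4POOL_CIDR setting in calico.yaml.
# Assumes the manifest contains these two lines, commented out:
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
# Requires GNU sed (-i without a backup suffix).

# Uncomment both lines and set the pool CIDR; removing only the "# " prefix
# keeps the original YAML indentation.
set_calico_cidr() {
  local file="$1" cidr="$2"
  sed -i -E \
    -e 's@^([[:space:]]*)# (- name: CALICO_IPV4POOL_CIDR)@\1\2@' \
    -e 's@^([[:space:]]*)#   value: "192\.168\.0\.0/16"@\1  value: "'"$cidr"'"@' \
    "$file"
}

# Print the active (uncommented) pool CIDR, if any
get_calico_cidr() {
  grep -A1 -E '^[[:space:]]*- name: CALICO_IPV4POOL_CIDR' "$1" |
    grep -oE '[0-9]+(\.[0-9]+){3}/[0-9]+'
}
```

For example, `set_calico_cidr calico.yaml 10.200.0.0/16`, then `get_calico_cidr calico.yaml` should print the same value as podSubnet in kubeadm.yaml.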

修改配置为与POD网段一致(默认为192.168.0.0/16):

- name: CALICO_IPV4POOL_CIDR

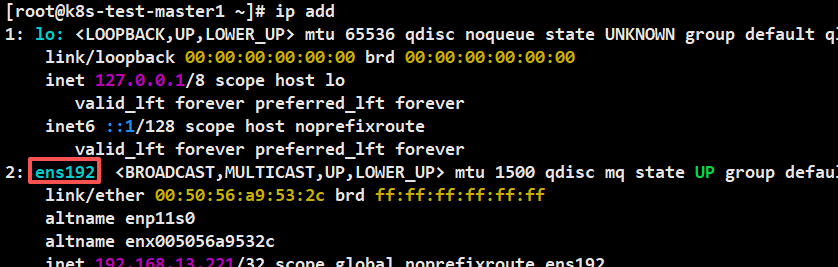

value: "10.200.0.0/16"修改接口匹配规则,根据实际接口匹配:

- name: CLUSTER_TYPE

value: "k8s,bgp"

# 添加以下配置,匹配实际的接口

- name: IP_AUTODETECTION_METHOD

value: "interface=ens192"

# Auto-detect the BGP IP address.

- name: IP

value: "autodetect"启动:kubectl apply -f calico.yaml

Check the system pods: `kubectl get pod -o wide -n kube-system`

Check the node status: `kubectl get nodes -o wide`

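Test the network

```bash
# Optional: pre-pull the test image on this node into the k8s.io namespace used by the kubelet
# (a plain `ctr images pull` stores it in the "default" namespace, which the kubelet does not see)
ctr -n k8s.io images pull docker.1ms.run/library/busybox:1.28

# Start a throwaway pod with an interactive shell; it is deleted when the shell exits
kubectl run busybox --image docker.1ms.run/library/busybox:1.28 --image-pull-policy=IfNotPresent --restart=Never --rm -it -- sh

# Inside the pod: check DNS resolution and outbound connectivity
ping www.baidu.com
```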
